SPAICR

Spatial AI for cooperative construction robotics

Principal Investigators

Prof. Dr. Kathrin Dörfler

TT Professorship for Digital Fabrication, Department of Architecture

Prof. Dr. Stefan Leutenegger

TT Professorship for Machine Learning for Robotics, Department of Informatics

Project contributors

Begüm Saral, M.A.,

Professorship for Digital Fabrication, Department of Architecture

Hanzhi Chen, M.Sc.,

Professorship for Machine Learning for Robotics, Department of Informatics

Motivation and Goals

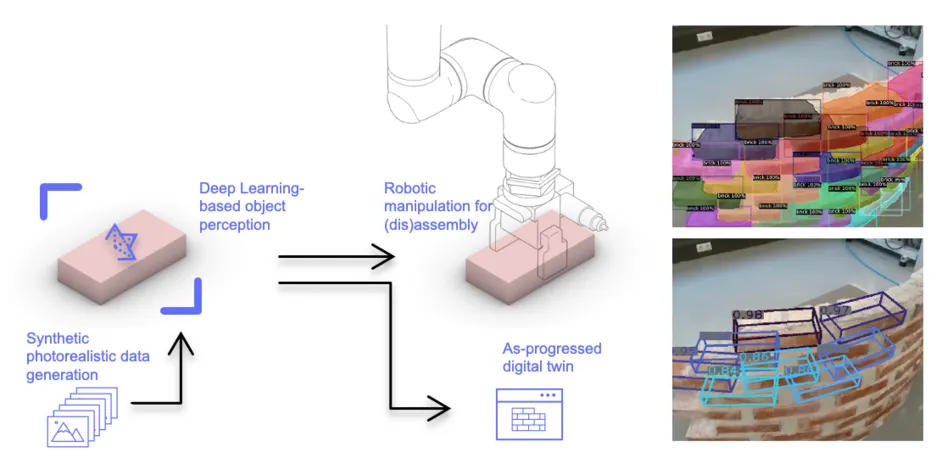

With SPAICR, we explore Spatial Artificial Intelligence (Spatial AI): a real-time, vision-driven capability that enables mobile construction robots to understand and interact intelligently with their surroundings, allowing them to perform complex tasks autonomously on building sites. Our research advances methods for spatial understanding at both the object and the scene level and integrates them into robotic manipulation routines for complex assembly and disassembly tasks (Fig. 1). To assess these methods, we conduct experimental studies of how well Spatial AI-based techniques support autonomous operation in unstructured environments.

Methods

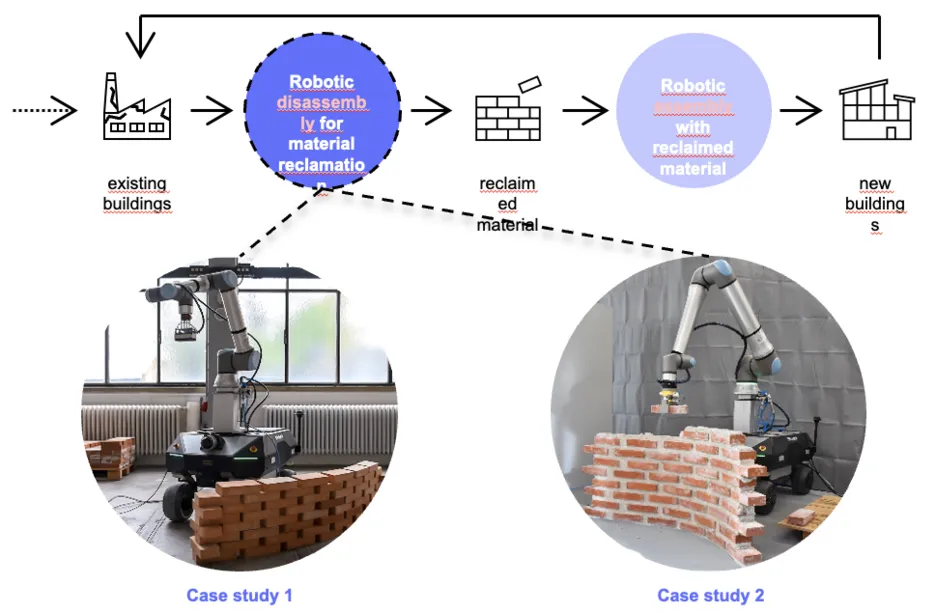

We developed a deep learning-based perception system that detects building materials within existing structures and estimates their poses (Fig. 2). Its neural networks are trained on data from a photorealistic synthetic data generator to estimate brick poses in complex configurations and under occlusion. Two case studies, on the robotic disassembly of dry-stacked and of mortar-bound brickwork, validated these methods using specialised manipulation routines (Fig. 3). During disassembly, an as-progressed digital twin updates the semantic, spatial, and geometric data of the structure in real time.
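The as-progressed digital twin described above can be pictured as a per-brick record that is updated each time the robot removes a piece. The sketch below is a minimal, hypothetical illustration, not the project's implementation: the class names (`BrickRecord`, `AsProgressedTwin`), the pose format (translation plus unit quaternion), the state labels, and the example dimensions are all assumptions. It shows how semantic (label), spatial (pose), and geometric (dimensions) data could be kept per brick, and how logging the removal order supports documentation and yields a candidate reassembly sequence.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical pose format: translation (x, y, z) in metres plus a unit
# quaternion (w, x, y, z). This is an assumption, not the project's format.
Pose = Tuple[Tuple[float, float, float], Tuple[float, float, float, float]]


@dataclass
class BrickRecord:
    brick_id: str
    label: str                               # semantic class (hypothetical labels)
    pose: Pose                               # latest estimated pose in the world frame
    dimensions: Tuple[float, float, float]   # geometric extent in metres
    state: str = "in_place"                  # "in_place" or "removed"
    removal_step: int = -1                   # step at which the brick was removed


@dataclass
class AsProgressedTwin:
    bricks: Dict[str, BrickRecord] = field(default_factory=dict)
    step: int = 0
    removal_log: List[str] = field(default_factory=list)

    def register(self, record: BrickRecord) -> None:
        """Add a brick detected by the perception system."""
        self.bricks[record.brick_id] = record

    def update_pose(self, brick_id: str, pose: Pose) -> None:
        """Overwrite a brick's pose with a newer perception estimate."""
        self.bricks[brick_id].pose = pose

    def mark_removed(self, brick_id: str) -> None:
        """Record that the robot has removed a brick at the next step."""
        record = self.bricks[brick_id]
        if record.state == "removed":
            raise ValueError(f"{brick_id} was already removed")
        self.step += 1
        record.state = "removed"
        record.removal_step = self.step
        self.removal_log.append(brick_id)

    def remaining(self) -> List[str]:
        """IDs of bricks still in the structure."""
        return [bid for bid, r in self.bricks.items() if r.state == "in_place"]

    def reassembly_order(self) -> List[str]:
        """Reversed removal order: a candidate (not verified) reassembly sequence."""
        return list(reversed(self.removal_log))


# Usage: two stacked bricks, removed top-down (example values only).
twin = AsProgressedTwin()
identity = (1.0, 0.0, 0.0, 0.0)
size = (0.24, 0.115, 0.071)
twin.register(BrickRecord("b1", "brick", ((0.0, 0.0, 0.0), identity), size))
twin.register(BrickRecord("b2", "brick", ((0.0, 0.0, 0.071), identity), size))
twin.mark_removed("b2")
twin.mark_removed("b1")
print(twin.reassembly_order())  # → ['b1', 'b2']
```

Reversing the removal log is only a heuristic: for bricks removed top-down it gives a bottom-up placement order, but a real reassembly planner would also need to check stability and reachability.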

Main Achievements

- Applied photorealistic synthetic data generation for discrete building blocks to train deep learning models, meeting the models' large data requirements despite the scarcity of datasets specific to construction materials.

- Demonstrated the effectiveness of deep learning-based object perception methods in facilitating on-site robotic disassembly for the reclamation and future reintegration of construction materials into new building cycles.

- Introduced an approach for generating an as-progressed digital twin of a disassembled structure, serving as a resource for documentation and potential reassembly.

Next Steps

Given the particular challenges of on-site construction and of handling irregular materials, the next steps will integrate the deep learning-based object-level perception system with SLAM-based scene-level perception methods. The combined approach will be validated through mobile robotic assembly routines using reclaimed materials.

Publications

- Anthropomorphic Grasping with Neural Object Shape Completion, H. Chen, …, S. Leutenegger, RA-L, 2023.

- Mobile Robotic Brickwork Disassembly, B. Saral, H. Chen, S. Leutenegger, and K. Dörfler, Rob|Arch 2024 Proceedings (forthcoming).

- Learning Object-Centric Neural Grasp Functions from Single Annotated Example Object, H. Chen and S. Leutenegger, ICRA, 2024.

- Robotic Deconstruction of Brickwork Structures Enhanced by Deep Learning-Based Object Perception, B. Saral, H. Chen, S. Leutenegger, and K. Dörfler, Automation in Construction, 2025 (in progress).

Awards

- New Frontiers Award, Runner-Up, Rob|Arch 2024, “Mobile Robotic Brickwork Disassembly”, 2024.