AI4TWINNING

Subproject: AI and Earth Observation for a Multi-scale 3D Reconstruction of the Built Environment

Principal Investigator

Prof. Dr.-Ing. Xiaoxiang Zhu,

Data Science in Earth Observation

Project contributors

Dr.-Ing. Yao Sun,

Data Science in Earth Observation

Zhaiyu Chen, M.Sc.,

Data Science in Earth Observation

Project summary

In recent years, Earth observation (EO) data on the order of tens of petabytes have become available from complementary sources. EO satellites, for example, routinely and reliably provide large-scale geo-information on cities worldwide. However, such data are limited in resolution and viewing geometry. Closer-range platforms such as aircraft, on the other hand, offer more flexibility for capturing Earth features on demand.

This sub-project develops new data science and machine learning approaches tailored to the optimal exploitation of the big geospatial data provided by these platforms, in order to provide valuable information for a better understanding of the built world. In particular, we focus on the 3D reconstruction of the built environment at the level of individual buildings. The research comprises two streams. The large-scale stream aims at a comprehensive, large-scale 3D mapping of built facilities from monocular remote sensing imagery, complementary to the mapping derived from camera streams (Project Cremers). The local, very-high-resolution stream aims at a more detailed view of selected points of interest, which can serve as a basis for thermal mapping (Project Stilla), semantic understanding (Project Kolbe & Petzold) and BIMs (Project Borrmann).

From the AI methodology perspective, the large-scale stream focuses on the robustness and transferability of the deep learning and machine learning models to be developed. For the very-high-resolution stream, we investigate in particular the fusion of multi-sensor data, as well as hybrid models that combine model-based signal processing methods with data-driven machine learning models for improved information retrieval.

With the experience gained in this sub-project, we will lay the foundation for future Earth observation that will be characterized by an ever-improving trade-off between high coverage and simultaneously high spatial and temporal resolution, ultimately leading to the capability of using AI and Earth observation to provide a multi-scale 3D view of our built environment.

Project (preliminary) results

Two work packages have been developed for the multi-scale 3D reconstruction of the built environment, one focusing on large scale and the other on high resolution:

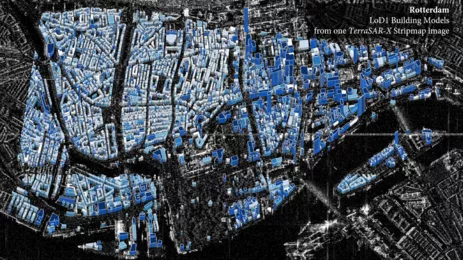

- For the large-scale stream, bounding box regression networks have been introduced to retrieve building heights from a single TerraSAR-X spotlight or stripmap image (Sun et al., 2022), enabling large-scale building reconstruction at Level of Detail 1 (LoD-1). The proposed network significantly reduces the computational cost while maintaining the height accuracy for individual buildings. The algorithm has been deployed to generate city models of Berlin, Rotterdam, and New York.

-

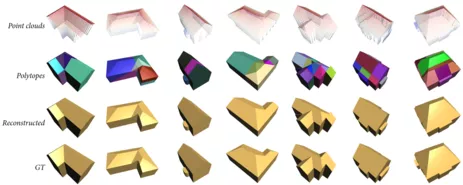

For the high-resolution stream, a novel framework has been developed which incorporates learnable implicit surface representation into explicitly constructed geometry for LoD-2 building reconstruction (Chen et al., 2022). Compared with state-of-the-art methods, the proposed framework can obtain high-quality building models with significant advantages in fidelity, compactness, and computational efficiency, with validation on real-world point clouds. As an extension, a series of polytopes-based graph neural networks are exploited in an end-to-end fashion. The extended method has shown promising reconstruction performance on a synthetic dataset covering all 291k+ buildings in the city of Munich.

The two work packages can therefore deliver building models in LoD-1 and LoD-2, providing a multi-scale 3D view of the built environment.
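To illustrate what an LoD-1 model is, the following minimal Python sketch extrudes a 2D building footprint by a single height value into a flat-roofed block. It is not the method of Sun et al. (2022): the function names `extrude_footprint`, `footprint_area` and `prism_volume`, the counter-clockwise footprint convention, and the example coordinates are all assumptions made for illustration only. In practice, the height would come from a retrieval step such as the one described above.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def extrude_footprint(
    footprint: List[Point2D], height: float, ground: float = 0.0
) -> Tuple[List[Point3D], List[List[int]]]:
    """Extrude a footprint polygon into a flat-roofed prism (LoD-1 style block).

    Assumes (hypothetically) that the footprint is a simple polygon given in
    counter-clockwise order without a repeated closing vertex.
    Returns the vertices and the faces as lists of vertex indices.
    """
    if len(footprint) < 3:
        raise ValueError("a footprint needs at least three vertices")
    if height <= 0:
        raise ValueError("height must be positive")

    n = len(footprint)
    bottom = [(x, y, ground) for x, y in footprint]
    top = [(x, y, ground + height) for x, y in footprint]
    vertices = bottom + top

    faces: List[List[int]] = []
    # Floor: reversed order so that its normal points downwards.
    faces.append(list(range(n - 1, -1, -1)))
    # Roof: same order as the footprint, normal points upwards.
    faces.append(list(range(n, 2 * n)))
    # One vertical wall per footprint edge, with outward-pointing normal.
    for i in range(n):
        j = (i + 1) % n
        faces.append([i, j, n + j, n + i])
    return vertices, faces


def footprint_area(footprint: List[Point2D]) -> float:
    """Unsigned polygon area via the shoelace formula."""
    n = len(footprint)
    twice_area = 0.0
    for i in range(n):
        x0, y0 = footprint[i]
        x1, y1 = footprint[(i + 1) % n]
        twice_area += x0 * y1 - x1 * y0
    return abs(twice_area) / 2.0


def prism_volume(footprint: List[Point2D], height: float) -> float:
    """Volume of the extruded block: footprint area times height."""
    return footprint_area(footprint) * height


if __name__ == "__main__":
    # Hypothetical 10 m x 10 m footprint with an assumed height of 5 m.
    square = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
    verts, faces = extrude_footprint(square, height=5.0)
    print(len(verts), "vertices,", len(faces), "faces")
    print("volume:", prism_volume(square, 5.0))
```

An LoD-2 model would additionally represent the roof shape, which a single height per building cannot capture; this is why the high-resolution stream works on point clouds instead.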

Project publications

Sun, Y., Mou, L., Wang, Y., Montazeri, S. and Zhu, X.X., 2022. Large-scale building height retrieval from single SAR imagery based on bounding box regression networks. ISPRS Journal of Photogrammetry and Remote Sensing, 184, pp.79-95.

Chen, Z., Ledoux, H., Khademi, S. and Nan, L., 2022. Reconstructing compact building models from point clouds using deep implicit fields. ISPRS Journal of Photogrammetry and Remote Sensing, 194, pp.58-73.