Radar SLAM for Autonomous Driving

- Sponsored by: ASTYX GmbH

- Project Lead: Dr. Ricardo Acevedo Cabra

- Scientific Lead: Dr. Georg Kuschk

- Co-Mentor: Fabian Wagner

- Term: Summer semester 2020

The results of this project are explained in detail in the final documentation and presentation.

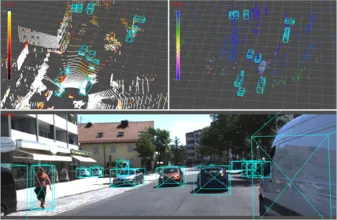

The goal of this project is to apply classical and deep-learning-based approaches to co-register multiple 3D radar point clouds and to compute the 6DoF relative poses between them, in the context of simultaneous localization and mapping (SLAM) using radar sensors.
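To make "computing a 6DoF relative pose between two point clouds" concrete, here is a minimal sketch of one classical building block: the SVD-based (Kabsch) least-squares rigid alignment. It assumes that point correspondences are already known (row i of one cloud matches row i of the other), which is generally not the case for real radar data. The function name `estimate_rigid_transform` and the synthetic data are illustrative assumptions, not part of the project.

```python
import numpy as np


def estimate_rigid_transform(source, target):
    """Least-squares rigid transform (R, t) such that target ~= source @ R.T + t.

    Assumption: row i of `source` corresponds to row i of `target`.
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)

    # Centre both clouds so that only the rotation remains to be solved.
    src_centroid = source.mean(axis=0)
    tgt_centroid = target.mean(axis=0)

    # 3x3 cross-covariance matrix of the centred points.
    H = (source - src_centroid).T @ (target - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)

    # Force det(R) = +1 so the result is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    t = tgt_centroid - R @ src_centroid
    return R, t


# Synthetic example (hypothetical data): rotate a random cloud by 20 degrees
# about the z-axis, shift it, and recover the motion.
rng = np.random.default_rng(0)
cloud_a = rng.uniform(-10.0, 10.0, size=(50, 3))
angle = np.deg2rad(20.0)
R_true = np.array([
    [np.cos(angle), -np.sin(angle), 0.0],
    [np.sin(angle), np.cos(angle), 0.0],
    [0.0, 0.0, 1.0],
])
t_true = np.array([1.0, -2.0, 0.5])
cloud_b = cloud_a @ R_true.T + t_true

R_est, t_est = estimate_rigid_transform(cloud_a, cloud_b)
```

The rotation contributes three degrees of freedom and the translation the other three. When correspondences are unknown, this step is typically embedded in an iterative scheme such as ICP, which alternates between matching nearest points and re-solving for the transform.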

SLAM is a well-established technique for localization and mapping and a fundamental component of robotics and autonomous driving. While camera- and Lidar-based approaches are well understood, automotive radar sensors did not, until recently, provide reasonably dense and accurately sampled measurements of the environment.

Work packages

- Literature research (sparse point cloud approaches vs camera-SLAM & Lidar-SLAM)

- Evaluate point cloud based classical algorithms on radar point clouds

- Evaluate state-of-the-art neural network approaches on radar point clouds

- Comparison against public camera/Lidar approaches using the existing multi-sensor setup

- Improvements via segmentation of static/dynamic objects

- Improvements via advanced surface estimation
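The evaluation and comparison work packages need some way to score an estimated relative pose against a reference pose. The sketch below shows one common choice, assumed here for illustration and not taken from the project: the angle of the residual rotation and the Euclidean distance between the two translations. The function names are hypothetical, and plain Python lists are used so the example has no dependencies.

```python
import math


def rotation_angle_deg(R):
    """Rotation angle in degrees of a 3x3 rotation matrix given as nested lists."""
    trace = R[0][0] + R[1][1] + R[2][2]
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def relative_pose_error(R_est, t_est, R_ref, t_ref):
    """Return (rotation error in degrees, translation error in the unit of t)."""
    # Residual rotation R_ref^T @ R_est; it is the identity when both agree.
    R_err = [
        [sum(R_ref[k][i] * R_est[k][j] for k in range(3)) for j in range(3)]
        for i in range(3)
    ]
    # Plain Euclidean distance between the two translation vectors.
    t_err = math.sqrt(sum((a - b) ** 2 for a, b in zip(t_est, t_ref)))
    return rotation_angle_deg(R_err), t_err


# Hypothetical example: the estimate is off by a quarter turn about the
# z-axis and by one unit along x.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
quarter_turn_z = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
rot_err, trans_err = relative_pose_error(
    quarter_turn_z, [1.0, 0.0, 0.0], identity, [0.0, 0.0, 0.0]
)
```

Reporting the two errors separately keeps degrees and distance units apart, which makes results from different sensors easier to compare.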