Multi-Agent Reinforcement Learning for Logistics

This project ran in summer term 2020. You can no longer apply to this project!

- Sponsored by: MaibornWolff GmbH

- Project Lead: Dr. Ricardo Acevedo Cabra

- Scientific Lead: Dr. Lenz Belzner, Jorrit Posor

- Co-Mentor: Oleh Melnyk

- Term: Summer semester 2020

Results of this project are explained in detail in the final documentation and presentation.

Domain: Decision-making in complex and stochastic logistics systems is a major challenge. To optimize resource efficiency, such as the utilization of a railway network or the performance of autonomous robots in a chaotic warehouse, many providers of logistics services rely on heuristics. Because logistics systems are so large, even a minimal improvement (e.g., 1%) in the decision-making processes translates into millions in cost savings and a significant reduction of CO2 emissions.

Methods: Artificial Intelligence has produced remarkable results in complex decision-making systems. Techniques like multi-agent reinforcement learning (MARL) surpass human performance in highly complex domains like StarCraft II. The data science & artificial intelligence department at MaibornWolff cooperates with providers of logistics services to apply these state-of-the-art methods to logistics systems.

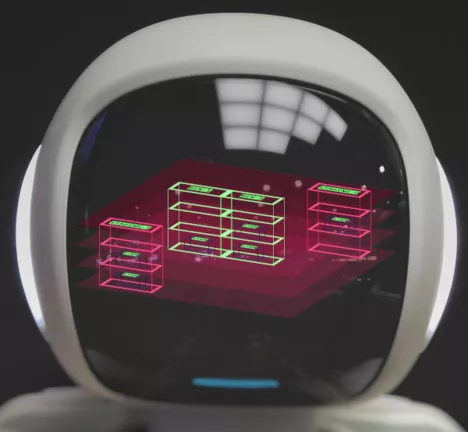

Goal: The goal of this project is to optimize the logistics process of a simulated chaotic warehouse (CW). The metric to minimize is the average number of steps agents take to finish transactions. This optimization can be achieved in two ways: through smart modeling of the CW and/or through the implementation of a sophisticated MARL algorithm.

A chaotic warehouse consists of the following components:

- items

- staging-in-area (one): items of an inbound-transaction arrive here

- staging-out-area (one): items of an outbound-transaction need to be delivered here

- bins (multiple): hold items

- agents: move through the CW to put and pick items

- inbound-transactions: specify bundles of items to be picked from the staging-in-area and put into bins

- outbound-transactions: specify bundles of items to be picked from bins and delivered to the staging-out-area
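
To make the components above concrete, they could be modeled roughly as follows. This is only a minimal sketch and not the project's simulation environment: the grid layout, the Manhattan-distance step count, and all class, field, and method names (`Bin`, `Transaction`, `Agent`, `ChaoticWarehouse`, `move_to`, `put`, `pick`) are assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Tuple

Position = Tuple[int, int]  # (row, column) on an assumed grid layout


@dataclass
class Bin:
    """A storage location that holds any mix of items."""
    position: Position
    items: Counter = field(default_factory=Counter)


@dataclass
class Transaction:
    """A bundle of items; kind is "inbound" or "outbound"."""
    kind: str
    items: Counter


@dataclass
class Agent:
    """Moves through the warehouse and counts the steps it takes."""
    position: Position
    carrying: Counter = field(default_factory=Counter)
    steps: int = 0

    def move_to(self, target: Position) -> None:
        # Assumption: one step per grid cell, so distance is Manhattan distance.
        self.steps += abs(target[0] - self.position[0]) + abs(target[1] - self.position[1])
        self.position = target

    def put(self, target_bin: Bin, item: str, count: int = 1) -> None:
        if self.carrying[item] < count:
            raise ValueError(f"agent does not carry {count} x {item}")
        self.carrying[item] -= count
        target_bin.items[item] += count

    def pick(self, source_bin: Bin, item: str, count: int = 1) -> None:
        if source_bin.items[item] < count:
            raise ValueError(f"bin does not hold {count} x {item}")
        source_bin.items[item] -= count
        self.carrying[item] += count


@dataclass
class ChaoticWarehouse:
    """One staging-in-area, one staging-out-area, multiple bins and agents."""
    staging_in: Position
    staging_out: Position
    bins: List[Bin]
    agents: List[Agent]


# Usage: an agent carries one item from the staging-in-area to a bin.
warehouse = ChaoticWarehouse(
    staging_in=(0, 0),
    staging_out=(0, 9),
    bins=[Bin(position=(2, 3))],
    agents=[Agent(position=(0, 0), carrying=Counter({"bolt": 1}))],
)
agent, target = warehouse.agents[0], warehouse.bins[0]
agent.move_to(target.position)
agent.put(target, "bolt")
```

In a model like this, the per-agent `steps` counter is the quantity that would be averaged over finished transactions to obtain the metric named in the goal.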

Starting Point: As a participant in this project, you will get access to the implementation of a baseline algorithm and to the simulation environment of the CW (Python code). The baseline algorithm is based on Q-learning and uses a Deep Q-Network. To meet the goal, you can build your solution on the provided code.
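
For readers new to Q-learning, the core update rule can be sketched in a few lines. Note that this is a tabular simplification and not the provided baseline: a Deep Q-Network replaces the lookup table below with a neural network. The hyperparameter values, the function names (`choose_action`, `q_update`), and the reward of -1 per step (chosen here so that fewer steps means higher return) are assumptions made for illustration.

```python
import random
from collections import defaultdict

ALPHA = 0.1    # learning rate (assumed value)
GAMMA = 0.95   # discount factor (assumed value)
EPSILON = 0.1  # exploration rate (assumed value)


def choose_action(q, state, actions, epsilon=EPSILON, rng=random):
    """Epsilon-greedy: explore with probability epsilon, else act greedily."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q[(state, a)])


def q_update(q, state, action, reward, next_state, actions,
             alpha=ALPHA, gamma=GAMMA, done=False):
    """Apply one Q-learning update and return the new Q-value."""
    # Bootstrapped target: immediate reward plus discounted best next value.
    best_next = 0.0 if done else max(q[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


# Usage: one update after an agent moved "right" and paid a step cost of -1.
actions = ["left", "right"]
q = defaultdict(float)  # unseen (state, action) pairs start at 0.0
new_value = q_update(q, "s0", "right", -1.0, "s1", actions)
greedy = choose_action(q, "s0", actions, epsilon=0.0)
```

After this single update, the value of moving "right" in state "s0" has dropped below the untouched value of "left", so the greedy choice in "s0" switches to "left".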

What you’ll learn: From playing games to driving to controlling fleets of autonomous trains/robots to optimally distributing e-scooters in a city, many problems require decision-making over time in dynamic environments. Throughout this project, you’ll understand how real-world decision-making processes can be modeled and simulated. Furthermore, you’ll learn how AI can be used to learn optimized strategies for these processes.