- Sponsored by: PwC

- Project lead: Dr. Ricardo Acevedo Cabra

- Scientific leads: Stefan Wagner, Yuqing Huang, Henri Petuker, Oliver Kobsik

- TUM co-mentor: TBA

- Term: Winter semester 2025

- Application deadline: Sunday 20.07.2025

Apply to this project here

About PwC

The PwC network spans the globe and employs nearly 370,000 people. As part of the global PwC network, PwC Germany is well established, with 20 locations and 15,000 employees across the country. We are constantly on the lookout for new colleagues who are enthusiastic about innovation. Every day, PwC Germany faces new challenges and issues, which we always address with our values in mind: we act with integrity, we make a difference, we work together, we care for others, and we reimagine the possible day by day.

In our 'Data & AI' practice team within Financial Services Transformation Consulting, we advise our clients (multinational banks, insurance companies, and finance-oriented corporates) on the digital transformation of their enterprises. This covers Data & Analytics, Big Data, and Artificial Intelligence, including Generative and Agentic AI.

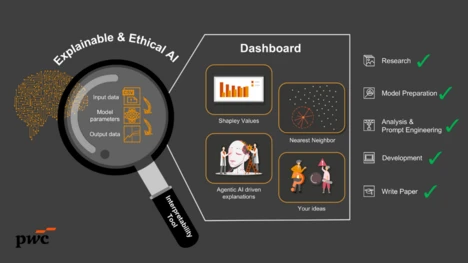

About the project “Deep-Dive in Explainable and Ethical AI with Generative and Agentic AI”

Are you interested in researching and developing state-of-the-art explainable and ethical AI solutions that comply with future regulatory standards?

Problem statement: Rapid advances in AI have brought significant benefits to sectors such as finance, healthcare, and transportation. However, the widespread deployment of AI systems has raised critical concerns, particularly around transparency, fairness, and ethics. As AI technologies continue to evolve, there is a pressing need to ensure that these systems are not only effective but also transparent and aligned with societal values and regulatory requirements.

Deep learning networks are known for their effectiveness across a wide variety of tasks, but they often function as "black boxes", making their decision-making processes opaque and difficult to interpret. GenAI offers a promising way to address this challenge by generating explanations of the complex inner workings of deep learning models. By applying GenAI strategically, we want to explore innovative methodologies that enhance the interpretability and transparency of deep learning networks.

This project responds to the urgent need for explainable and ethical AI by investigating novel and unconventional solutions that incorporate GenAI while remaining compliant with regulatory frameworks. The team will engage in pioneering research and development to create AI solutions that are transparent, fair, and ethically sound, and that both users and stakeholders can understand and trust. By advancing this critical area of AI, the project helps shape a future in which AI technologies are not only state-of-the-art but also socially responsible and legally compliant.

Objective: Our goal is to develop a tool that incorporates established methods such as Shapley values and nearest-neighbor approximation. In addition, we aim to research and integrate GenAI and Agentic AI approaches to improve the transparency and ethical understanding of specific deep learning models. We also intend to use the tool to compare similar deep learning models on the same use case, highlighting and interpreting their differences. The tool should offer a suite of interpretive features that let users visualize and understand the decision-making process of AI models as required. In parallel, the project will explore ethical guidelines to ensure fairness and compliance with potential regulatory standards.
